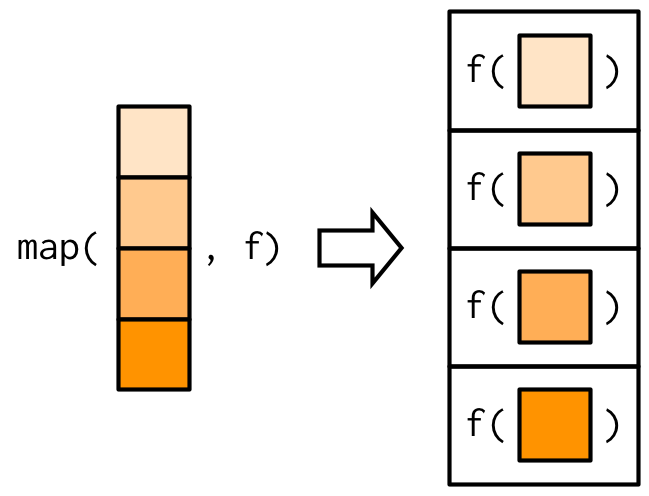

class: title-slide, center <span class="fa-stack fa-4x"> <i class="fa fa-circle fa-stack-2x" style="color: #ffffff;"></i> <strong class="fa-stack-1x" style="color:#E7553C;">1</strong> </span> # Predicting ## Introduction to Machine Learning in the Tidyverse ### Alison Hill · Garrett Grolemund #### [https://conf20-intro-ml.netlify.com/](https://conf20-intro-ml.netlify.com/) · [https://rstd.io/conf20-intro-ml](https://rstd.io/conf20-intro-ml) --- class: middle, center, frame # How do we pick? -- **Which** .display[data] -- **Which** .display[criteria] -- **Which** .display[model] ??? This creates a large practical difference between Machine Learning and Hypothesis testing. At the end of the day, Machine Learners will evaluate _many_ different types of models for a single problem. --- name: ml-goal class: middle, center, frame # Goal of Machine Learning -- ## generate accurate predictions --- name: predictions class: middle, center, frame # Goal of Machine Learning ## 🔮 generate accurate .display[predictions] --- class: middle # .center[`lm()`] ```r lm_ames <- lm(Sale_Price ~ Gr_Liv_Area, data = ames) lm_ames ## ## Call: ## lm(formula = Sale_Price ~ Gr_Liv_Area, data = ames) ## ## Coefficients: ## (Intercept) Gr_Liv_Area ## 13289.6 111.7 ``` ??? So let's start with prediction. To predict, we have to have two things: a model to generate predictions, and data to predict --- name: step1 background-image: url("images/predicting/predicting.001.jpeg") background-size: contain --- class: middle, center # Quiz How many R functions can you think of that do some type of linear regression? -- `glmnet` for regularized regression `stan` for Bayesian regression `keras` for regression using tensorflow `spark` for large data sets ... --- class: inverse, middle, center # How would we do this with parsnip? 
<img src="https://raw.githubusercontent.com/rstudio/hex-stickers/master/PNG/parsnip.png" width="20%" />

---
class: middle, frame

# .center[To specify a model with parsnip]

.right-column[

1\. Pick a .display[model]

2\. Set the .display[engine]

3\. Set the .display[mode] (if needed)

]

---
class: middle, frame

# .center[To specify a model with parsnip]

```r
decision_tree() %>%
  set_engine("C5.0") %>%
  set_mode("classification")
```

---
class: middle, frame

# .center[To specify a model with parsnip]

```r
nearest_neighbor() %>%
  set_engine("kknn") %>%
  set_mode("regression")
```

---
class: middle, frame

.fade[
# .center[To specify a model with parsnip]
]

.right-column[

1\. Pick a .display[model]

.fade[
2\. Set the .display[engine]

3\. Set the .display[mode] (if needed)
]

]

---
class: middle, center

# 1\. Pick a .display[model]

All available models are listed at

<https://tidymodels.github.io/parsnip/articles/articles/Models.html>

<iframe src="https://tidymodels.github.io/parsnip/articles/articles/Models.html" width="504" height="400px"></iframe>

---
class: middle

.center[
# `linear_reg()`

Specifies a model that uses linear regression
]

```r
linear_reg(mode = "regression", penalty = NULL, mixture = NULL)
```

---
class: middle

.center[
# `linear_reg()`

Specifies a model that uses linear regression
]

```r
linear_reg(
  mode = "regression", # "default" mode, if exists
  penalty = NULL,      # model hyper-parameter
  mixture = NULL       # model hyper-parameter
  )
```

---
class: middle, frame

.fade[
# .center[To specify a model with parsnip]
]

.right-column[

.fade[
1\. Pick a .display[model]
]

2\. Set the .display[engine]

.fade[
3\. Set the .display[mode] (if needed)
]

]

---
class: middle, center

# `set_engine()`

Adds an engine to power or implement the model.

```r
lm_spec %>% set_engine(engine = "lm", ...)
```

---
class: middle, frame

.fade[
# .center[To specify a model with parsnip]
]

.right-column[

.fade[
1\. Pick a .display[model]

2\. Set the .display[engine]
]

3\.
Set the .display[mode] (if needed)

]

---
class: middle, center

# `set_mode()`

Sets the class of problem the model will solve, which influences which output is collected. Not necessary if the mode is already set in Step 1.

```r
lm_spec %>% set_mode(mode = "regression")
```

---
class: your-turn

# Your turn 1

Write a pipe that creates a model that uses `lm()` to fit a linear regression. Save it as `lm_spec` and look at the object. What does it return?

*Hint: you'll need https://tidymodels.github.io/parsnip/articles/articles/Models.html*

<div class="countdown" id="timer_5e46e4db" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div>

---

```r
lm_spec <-
  linear_reg() %>%          # Pick linear regression
  set_engine(engine = "lm") # set engine
lm_spec
## Linear Regression Model Specification (regression)
##
## Computational engine: lm
```

---
class: middle, center

# `fit_data()`

Trains a model by fitting it to data. Returns a parsnip model fit.

```r
fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames)
```

---
class: middle

.center[
# `fit_data()`

Trains a model by fitting it to data. Returns a parsnip model fit.
]

```r
fit_data(
  Sale_Price ~ Gr_Liv_Area, # a formula
  model = lm_spec,          # parsnip model
  data = ames               # dataframe
  )
```

---
class: your-turn

# Your turn 2

Double check.
Does ```r lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames) lm_fit ``` give the same results as ```r lm(Sale_Price ~ Gr_Liv_Area, data = ames) ``` <div class="countdown" id="timer_5e46e33e" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">02</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r lm(Sale_Price ~ Gr_Liv_Area, data = ames) ## ## Call: ## lm(formula = Sale_Price ~ Gr_Liv_Area, data = ames) ## ## Coefficients: ## (Intercept) Gr_Liv_Area ## 13289.6 111.7 ``` --- ```r lm_fit ## ══ Workflow [trained] ═══════════════════════════════════════════════════════════════════════════════════════════════════════ ## Preprocessor: Formula ## Model: linear_reg() ## ## ── Preprocessor ───────────────────────────────────────────────────────────────────────────────────────────────────────────── ## Sale_Price ~ Gr_Liv_Area ## ## ── Model ──────────────────────────────────────────────────────────────────────────────────────────────────────────────────── ## ## Call: ## stats::lm(formula = formula, data = data) ## ## Coefficients: ## (Intercept) Gr_Liv_Area ## 13289.6 111.7 ``` --- name: handout class: center, middle data `(x, y)` + model = fitted model --- class: center, middle # Show of hands How many people have used a fitted model to generate .display[predictions] with R? --- template: step1 --- name: step2 background-image: url("images/predicting/predicting.003.jpeg") background-size: contain --- class: middle, center # `predict()` Use a fitted model to predict new `y` values from data. Returns a tibble. ```r predict(lm_fit, new_data = ames) ``` --- ```r lm_fit %>% predict(new_data = ames) ## # A tibble: 2,930 x 1 ## .pred ## <dbl> ## 1 198255. ## 2 113367. ## 3 161731. ## 4 248964. ## 5 195239. ## 6 192447. ## 7 162736. ## 8 156258. ## 9 193787. ## 10 214786. 
## # … with 2,920 more rows ``` --- name: lm-predict class: middle, center # Predictions <img src="figs/01-Predicting/lm-predict-1.png" width="504" style="display: block; margin: auto;" /> --- class: your-turn # Your turn 3 Fill in the blanks. Use `predict()` to 1. Use your linear model to predict sale prices; save the tibble as `price_pred` 1. Add a pipe and use `mutate()` to add a column with the observed sale prices; name it `truth` *Hint: Be sure to remove every `_` before running the code!* <div class="countdown" id="timer_5e46e314" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">02</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames) price_pred <- lm_fit %>% predict(new_data = ames) %>% mutate(truth = ames$Sale_Price) price_pred ## # A tibble: 2,930 x 2 ## .pred truth ## <dbl> <int> ## 1 198255. 215000 ## 2 113367. 105000 ## 3 161731. 172000 ## 4 248964. 244000 ## 5 195239. 189900 ## 6 192447. 195500 ## 7 162736. 213500 ## 8 156258. 191500 ## 9 193787. 236500 ## 10 214786. 189000 ## # … with 2,920 more rows ``` --- template: handout -- data `(x)` + fitted model = predictions --- template: predictions --- name: accurate-predictions class: middle, center, frame # Goal of Machine Learning ## 🎯 generate .display[accurate predictions] ??? Now we have predictions from our model. What can we do with them? If we already know the truth, that is, the outcome variable that was observed, we can compare them! --- class: middle, center, frame # Axiom Better Model = Better Predictions (Lower error rate) --- template: lm-predict --- class: middle, center # Residuals <img src="figs/01-Predicting/lm-resid-1.png" width="504" style="display: block; margin: auto;" /> --- class: middle, center # Residuals The difference between the predicted and observed values. 
$$ \hat{y}_i - {y}_i$$

???

This refers to a single residual. Since residuals are errors, the sum of the errors would be a good measure of total error except for two things. What's one of them?

---
class: middle, center

# Quiz

What could go wrong?

$$ \sum_{i=1}^n (\hat{y}_i - {y}_i)$$

???

First, the sum would increase every time we add a new data point. That means models fit on larger data sets would have bigger errors than models fit on small data sets. That makes no sense, so we work with the mean error.

---
class: middle, center

# Quiz

What could go wrong?

$$ \frac{1}{n} \sum_{i=1}^n (\hat{y}_i - {y}_i)$$

???

What else makes this an insufficient measure of error? Positive and negative residuals would cancel each other out. We can fix that by taking the absolute value of each residual...

---
class: middle, center

# Quiz

What could go wrong?

$$ \frac{1}{n} \sum_{i=1}^n |\hat{y}_i - {y}_i|$$

.footnote[Mean Absolute Error]

???

...but absolute values are hard to work with mathematically. They're not differentiable at zero. That's not a big deal to us because we can use computers. But it mattered in the past, and as a result statisticians used the square instead, which also penalizes large residuals more than smaller residuals. The squared version also has some convenient theoretical properties: its square root is the standard deviation of the residuals about zero. So we will use the square.

---
class: middle, center

# Quiz

What could go wrong?

$$ \frac{1}{n} \sum_{i=1}^n (\hat{y}_i - {y}_i)^2$$

???

If you take the square root to return things to the same units as the residuals, you have the root mean squared error.

---
class: middle, center

# Quiz

What could go wrong?

$$ \sqrt{\frac{1}{n} \sum_{i=1}^n (\hat{y}_i - {y}_i)^2 }$$

.footnote[Root Mean Squared Error]

---
class: middle, center

# RMSE

Root Mean Squared Error - The standard deviation of the residuals about zero.
$$ \sqrt{\frac{1}{n} \sum_{i=1}^n (\hat{y}_i - {y}_i)^2 }$$ --- class: middle, center # `rmse()*` Calculates the RMSE based on two columns in a dataframe: The .display[truth]: `\({y}_i\)` The predicted .display[estimate]: `\(\hat{y}_i\)` ```r rmse(data, truth, estimate) ``` .footnote[`*` from `yardstick`] --- ```r lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames) price_pred <- lm_fit %>% predict(new_data = ames) %>% mutate(price_truth = ames$Sale_Price) *rmse(price_pred, truth = price_truth, estimate = .pred) ## # A tibble: 1 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 56505. ``` --- template: step1 --- template: step2 --- name: step3 background-image: url("images/predicting/predicting.004.jpeg") background-size: contain --- template: handout -- data `(x)` + fitted model = predictions -- data `(y)` + predictions = metrics --- class: middle, center, inverse A model doesn't have to be a straight line! --- exclude: true ```r rt_spec <- decision_tree() %>% set_engine(engine = "rpart") %>% set_mode("regression") rt_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = rt_spec, data = ames) price_pred <- predict(rt_fit, new_data = ames) %>% mutate(price_truth = ames$Sale_Price) rmse(price_pred, truth = price_truth, estimate = .pred) ``` --- class: middle, center <img src="figs/01-Predicting/unnamed-chunk-26-1.png" width="504" /> --- class: middle, center <img src="figs/01-Predicting/unnamed-chunk-27-1.png" width="504" /> --- class: middle, inverse, center # Do you trust it? 
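---
class: middle

One reason for caution, as a minimal sketch on simulated data rather than ames: when least squares fits a flexible model that contains the straight line as a special case, its RMSE on the data it was fit to can only match or beat the line's. The names `sim`, `rmse_by_hand()`, `fit_line` and `fit_wiggly`, and the choice of a degree-10 polynomial, are assumptions made for illustration and use base R only.

```r
# Simulated data: a noisy straight-line relationship (hypothetical, not ames)
set.seed(100)
n <- 60
x <- runif(n, 0, 10)
y <- 3 + 2 * x + rnorm(n, sd = 4)
sim <- data.frame(x = x, y = y)

# Helper: root mean squared error of predictions against observed values
rmse_by_hand <- function(truth, estimate) {
  sqrt(mean((estimate - truth)^2))
}

# A straight line and a far more flexible degree-10 polynomial
fit_line   <- lm(y ~ x, data = sim)
fit_wiggly <- lm(y ~ poly(x, 10), data = sim)

# RMSE measured on the same rows that were used for fitting
train_rmse_line   <- rmse_by_hand(sim$y, predict(fit_line, newdata = sim))
train_rmse_wiggly <- rmse_by_hand(sim$y, predict(fit_wiggly, newdata = sim))

c(line = train_rmse_line, wiggly = train_rmse_wiggly)
```

The flexible fit scores at least as well here even though the data were generated from a straight line, so this comparison alone cannot say which model will predict new data better.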
---
class: middle, inverse, center

# Overfitting

---

<img src="figs/01-Predicting/unnamed-chunk-29-1.png" width="504" style="display: block; margin: auto;" />

---

<img src="figs/01-Predicting/unnamed-chunk-30-1.png" width="504" style="display: block; margin: auto;" />

---

<img src="figs/01-Predicting/unnamed-chunk-31-1.png" width="504" style="display: block; margin: auto;" />

---

.pull-left[
<img src="figs/01-Predicting/unnamed-chunk-33-1.png" width="504" style="display: block; margin: auto;" />
]

.pull-right[
<img src="figs/01-Predicting/unnamed-chunk-34-1.png" width="504" style="display: block; margin: auto;" />
]

---
class: your-turn

# Your turn 4

.pull-left[
In your teams, decide which model:

1. Has the smallest residuals
2. Will have the lower prediction error. Why?
]

.pull-right[
<img src="figs/01-Predicting/unnamed-chunk-35-1.png" width="50%" /><img src="figs/01-Predicting/unnamed-chunk-35-2.png" width="50%" />
]

<div class="countdown" id="timer_5e46e421" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">01</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">30</span></code> </div>

---

<img src="figs/01-Predicting/unnamed-chunk-37-1.png" width="504" style="display: block; margin: auto;" />

---

<img src="figs/01-Predicting/unnamed-chunk-38-1.png" width="504" style="display: block; margin: auto;" />

---
class: middle, center, frame

# Axiom 1

The best way to measure a model's performance at predicting new data is to .display[predict new data].

---
class: middle, center, frame

# Goal of Machine Learning

--

## 🔨 construct .display[models] that

--

## 🎯 generate .display[accurate predictions]

--

## 🆕 for .display[future, yet-to-be-seen data]

--

.footnote[Max Kuhn & Kjell Johnson, http://www.feat.engineering/]

???

But we need new data...

---
class: middle, center, frame

# Method #1

## The holdout method

---

<img src="figs/01-Predicting/all-split-1.png" width="864" />

???
We refer to the group for which we know the outcome, and use to develop the algorithm, as the training set. We refer to the group for which we pretend we don’t know the outcome as the test set. --- class: center # `initial_split()` "Splits" data randomly into a single testing and a single training set. ```r initial_split(data, prop = 3/4) ``` --- ```r ames_split <- initial_split(ames, prop = 0.75) ames_split ## <2198/732/2930> ``` ??? data splitting --- class: center # `training()` and `testing()` Extract training and testing sets from an rsplit ```r training(ames_split) testing(ames_split) ``` --- ```r train_set <- training(ames_split) train_set ## # A tibble: 2,198 x 81 ## MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street Alley Lot_Shape ## <fct> <fct> <dbl> <int> <fct> <fct> <fct> ## 1 One_Story_… Resident… 141 31770 Pave No_A… Slightly… ## 2 One_Story_… Resident… 80 11622 Pave No_A… Regular ## 3 One_Story_… Resident… 81 14267 Pave No_A… Slightly… ## 4 One_Story_… Resident… 93 11160 Pave No_A… Regular ## 5 Two_Story_… Resident… 74 13830 Pave No_A… Slightly… ## 6 Two_Story_… Resident… 78 9978 Pave No_A… Slightly… ## 7 One_Story_… Resident… 41 4920 Pave No_A… Regular ## 8 One_Story_… Resident… 43 5005 Pave No_A… Slightly… ## 9 One_Story_… Resident… 39 5389 Pave No_A… Slightly… ## 10 Two_Story_… Resident… 60 7500 Pave No_A… Regular ## # … with 2,188 more rows, and 74 more variables: Land_Contour <fct>, ## # Utilities <fct>, Lot_Config <fct>, Land_Slope <fct>, Neighborhood <fct>, ## # Condition_1 <fct>, Condition_2 <fct>, Bldg_Type <fct>, House_Style <fct>, ## # Overall_Qual <fct>, Overall_Cond <fct>, Year_Built <int>, ## # Year_Remod_Add <int>, Roof_Style <fct>, Roof_Matl <fct>, ## # Exterior_1st <fct>, Exterior_2nd <fct>, Mas_Vnr_Type <fct>, ## # Mas_Vnr_Area <dbl>, Exter_Qual <fct>, Exter_Cond <fct>, Foundation <fct>, ## # Bsmt_Qual <fct>, Bsmt_Cond <fct>, Bsmt_Exposure <fct>, ## # BsmtFin_Type_1 <fct>, BsmtFin_SF_1 <dbl>, BsmtFin_Type_2 <fct>, ## # BsmtFin_SF_2 
<dbl>, Bsmt_Unf_SF <dbl>, Total_Bsmt_SF <dbl>, Heating <fct>, ## # Heating_QC <fct>, Central_Air <fct>, Electrical <fct>, First_Flr_SF <int>, ## # Second_Flr_SF <int>, Low_Qual_Fin_SF <int>, Gr_Liv_Area <int>, ## # Bsmt_Full_Bath <dbl>, Bsmt_Half_Bath <dbl>, Full_Bath <int>, ## # Half_Bath <int>, Bedroom_AbvGr <int>, Kitchen_AbvGr <int>, ## # Kitchen_Qual <fct>, TotRms_AbvGrd <int>, Functional <fct>, ## # Fireplaces <int>, Fireplace_Qu <fct>, Garage_Type <fct>, ## # Garage_Finish <fct>, Garage_Cars <dbl>, Garage_Area <dbl>, ## # Garage_Qual <fct>, Garage_Cond <fct>, Paved_Drive <fct>, ## # Wood_Deck_SF <int>, Open_Porch_SF <int>, Enclosed_Porch <int>, ## # Three_season_porch <int>, Screen_Porch <int>, Pool_Area <int>, ## # Pool_QC <fct>, Fence <fct>, Misc_Feature <fct>, Misc_Val <int>, ## # Mo_Sold <int>, Year_Sold <int>, Sale_Type <fct>, Sale_Condition <fct>, ## # Sale_Price <int>, Longitude <dbl>, Latitude <dbl> ``` --- class: middle, center # Quiz Now that we have training and testing sets... -- Which dataset do you think we use for .display[fitting]? -- Which do we use for .display[predicting]? --- template: step1 --- template: step2 --- template: step3 background-image: url("images/predicting/predicting.004.jpeg") background-size: contain --- name: holdout-step1 background-image: url("images/predicting/predicting.005.jpeg") background-size: contain --- name: holdout-step2 background-image: url("images/predicting/predicting.006.jpeg") background-size: contain --- name: holdout-step3 background-image: url("images/predicting/predicting.007.jpeg") background-size: contain --- name: holdout-step4 background-image: url("images/predicting/predicting.008.jpeg") background-size: contain --- name: holdout background-image: url("images/predicting/predicting.009.jpeg") background-size: contain --- class: your-turn # Your turn 5 Fill in the blanks. Use `initial_split()`, `training()`, `testing()`, `lm()` and `rmse()` to: 1. Split **ames** into training and test sets. 
Save the rsplit! 1. Extract the training data. Fit a linear model to it. Save the model! 1. Measure the RMSE of your linear model with your test set. Keep `set.seed(100)` at the start of your code. <div class="countdown" id="timer_5e46e1f1" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">04</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r set.seed(100) # Important! ames_split <- initial_split(ames) ames_train <- training(ames_split) ames_test <- testing(ames_split) lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames_train) price_pred <- lm_fit %>% predict(new_data = ames_test) %>% mutate(price_truth = ames_test$Sale_Price) rmse(price_pred, truth = price_truth, estimate = .pred) ``` RMSE = 53884.78; compare to 56504.88 --- class: middle, center .pull-left[ ### Training RMSE = 57367.26 <img src="figs/01-Predicting/unnamed-chunk-46-1.png" width="504" /> ] -- .pull-right[ ### Testing RMSE = 53884.78 <img src="figs/01-Predicting/lm-test-resid-1.png" width="504" /> ] --- name: holdout-handout class: center, middle old data `(x, y)` + model = fitted model -- new data `(x)` + fitted model = predictions -- new data `(y)` + predictions = metrics --- class: middle, center # Quiz How much data should you set aside for testing? 
-- If .display[testing set] is small, performance metrics may be unreliable -- If .display[training set] is small, model fit may be poor --- class: middle, center, inverse # Stratified sampling --- <img src="figs/01-Predicting/unnamed-chunk-48-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-49-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-50-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-51-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-52-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-53-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-54-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-55-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-56-1.png" width="504" style="display: block; margin: auto;" /> --- <img src="figs/01-Predicting/unnamed-chunk-57-1.png" width="504" style="display: block; margin: auto;" /> --- ```r set.seed(100) # Important! ames_split <- initial_split(ames, * strata = Sale_Price, * breaks = 4) ames_train <- training(ames_split) ames_test <- testing(ames_split) lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames_train) price_pred <- lm_fit %>% predict(new_data = ames_test) %>% mutate(price_truth = ames_test$Sale_Price) rmse(price_pred, truth = price_truth, estimate = .pred) ``` --- class: middle, inverse .left-column[ # A bit messy, no? ] .right-column[ ```r set.seed(100) # Important! 
ames_split <- initial_split(ames, * strata = Sale_Price, * breaks = 4) ames_train <- training(ames_split) ames_test <- testing(ames_split) lm_fit <- fit_data(Sale_Price ~ Gr_Liv_Area, model = lm_spec, data = ames_train) price_pred <- lm_fit %>% predict(new_data = ames_test) %>% mutate(price_truth = ames_test$Sale_Price) rmse(price_pred, truth = price_truth, estimate = .pred) ``` ] --- class: middle, center # `fit_split()` Trains and tests a model with split data. Returns a tibble. ```r fit_split(Sale_Price ~ Gr_Liv_Area, model, split) ``` --- ```r lm_split <- fit_split(Sale_Price ~ Gr_Liv_Area, model = lm_spec, * split = ames_split) lm_split ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 6 ## splits id .metrics .notes .predictions .workflow ## * <list> <chr> <list> <list> <list> <list> ## 1 <split [2.2K… train/test … <tibble [2 ×… <tibble [0… <tibble [732 ×… <workflo… ``` --- .pull-left[ ```r fit_data( Sale_Price ~ Gr_Liv_Area, model, data ) ``` ] -- .pull-right[ ```r fit_split( Sale_Price ~ Gr_Liv_Area, model, * split ) ``` ] --- class: middle .center[ # Quiz The id column of `lm_split` contains a character vector with 1 row. What does the `splits` column contain? ] ```r lm_split <- fit_split(Sale_Price ~ Gr_Liv_Area, model = lm_spec, * split = ames_split) lm_split ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 6 ## splits id .metrics .notes .predictions .workflow ## * <list> <chr> <list> <list> <list> <list> ## 1 <split [2.2K… train/test … <tibble [2 ×… <tibble [0… <tibble [732 ×… <workflo… ``` --- class: inverse, middle, center A .display[list]! <!-- --> --- class: middle, center # Quiz How are data frames related to lists? --- class: middle, center # Quiz Can lists contain other lists? 
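---
class: middle

Both quiz questions can be checked at the console. This is a small sketch in base R; the `band` data frame, the `nested` list and the `scores` column are made-up examples, not objects from the workshop.

```r
# A small data frame (hypothetical example data)
band <- data.frame(
  name = c("Mick", "John", "Paul"),
  band = c("Stones", "Beatles", "Beatles")
)

# A data frame is a list whose elements are equal-length columns
is.list(band)
names(band)

# Lists can hold other lists, nested as deep as needed
nested <- list(a = 1, b = list(c = "x", d = list(e = TRUE)))
is.list(nested$b)
nested[["b"]][["d"]][["e"]]

# Put the two together: a data frame column can itself be a list
band$scores <- list(1:2, 3, 4:6)
class(band$scores)
```

A list column like `scores` is the same idea as the `splits` column of `lm_split`: one list element per row.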
--- class: middle # A simpler list ```r simple_split <- lm_split %>% select(splits, id) simple_split ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 2 ## splits id ## * <list> <chr> ## 1 <split [2.2K/732]> train/test split ``` --- class: inverse # 🐈, ⚡ How can you return just the contents of the first cell in splits? Write the code. ```r simple_split ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 2 ## splits id ## * <list> <chr> ## 1 <split [2.2K/732]> train/test split ``` <div class="countdown" id="timer_5e46e314" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">01</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- class: middle ```r simple_split %>% pluck("splits", 1) ## <2198/732/2930> simple_split[["splits"]][[1]] ## <2198/732/2930> simple_split[[1, 1]] ## <2198/732/2930> ``` --- class: middle, center # Quiz What is the difference between `[[` and `[` indexing? --- class: middle .columns[ .left-col[ ```r band_members # A tibble: 3 x 2 name band <chr> <chr> 1 Mick Stones 2 John Beatles 3 Paul Beatles ``` ] .middle-col[ ```r band_members[1] # A tibble: 3 x 1 name <chr> 1 Mick 2 John 3 Paul ``` ] .right-col[ ```r band_members[[1]] [1] "Mick" "John" "Paul" ``` ] ] --- background-image: url(images/listcols.001.jpeg) background-size: contain --- background-image: url(images/listcols.002.jpeg) background-size: contain --- background-image: url(images/listcols.003.jpeg) background-size: contain --- background-image: url(images/listcols.004.jpeg) background-size: contain --- class: middle ```r simple_split %>% pluck("splits", 1) ## <2198/732/2930> simple_split[["splits"]][[1]] ## <2198/732/2930> simple_split[[1, 1]] ## <2198/732/2930> ``` --- class: middle, center # `pluck()` Iterative `[[` indexing for lists ```r pluck(list, "name", 1, ...) 
``` --- class: middle # What would this do? ```r simple_split %>% pluck("splits", 1) %>% testing() ``` --- class: middle # What would this do? ```r simple_split %>% pluck("splits", 1) %>% testing() ## # A tibble: 732 x 81 ## MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street Alley Lot_Shape ## <fct> <fct> <dbl> <int> <fct> <fct> <fct> ## 1 One_Story_… Resident… 141 31770 Pave No_A… Slightly… ## 2 One_Story_… Resident… 80 11622 Pave No_A… Regular ## 3 One_Story_… Resident… 93 11160 Pave No_A… Regular ## 4 Two_Story_… Resident… 75 10000 Pave No_A… Slightly… ## 5 Two_Story_… Resident… 47 53504 Pave No_A… Moderate… ## 6 One_Story_… Resident… 88 11394 Pave No_A… Regular ## 7 One_Story_… Resident… 65 8450 Pave No_A… Regular ## 8 Two_Story_… Resident… 21 1680 Pave No_A… Regular ## 9 Two_Story_… Resident… 0 7851 Pave No_A… Regular ## 10 Two_Story_… Resident… 58 16770 Pave No_A… Moderate… ## # … with 722 more rows, and 74 more variables: Land_Contour <fct>, ## # Utilities <fct>, Lot_Config <fct>, Land_Slope <fct>, Neighborhood <fct>, ## # Condition_1 <fct>, Condition_2 <fct>, Bldg_Type <fct>, House_Style <fct>, ## # Overall_Qual <fct>, Overall_Cond <fct>, Year_Built <int>, ## # Year_Remod_Add <int>, Roof_Style <fct>, Roof_Matl <fct>, ## # Exterior_1st <fct>, Exterior_2nd <fct>, Mas_Vnr_Type <fct>, ## # Mas_Vnr_Area <dbl>, Exter_Qual <fct>, Exter_Cond <fct>, Foundation <fct>, ## # Bsmt_Qual <fct>, Bsmt_Cond <fct>, Bsmt_Exposure <fct>, ## # BsmtFin_Type_1 <fct>, BsmtFin_SF_1 <dbl>, BsmtFin_Type_2 <fct>, ## # BsmtFin_SF_2 <dbl>, Bsmt_Unf_SF <dbl>, Total_Bsmt_SF <dbl>, Heating <fct>, ## # Heating_QC <fct>, Central_Air <fct>, Electrical <fct>, First_Flr_SF <int>, ## # Second_Flr_SF <int>, Low_Qual_Fin_SF <int>, Gr_Liv_Area <int>, ## # Bsmt_Full_Bath <dbl>, Bsmt_Half_Bath <dbl>, Full_Bath <int>, ## # Half_Bath <int>, Bedroom_AbvGr <int>, Kitchen_AbvGr <int>, ## # Kitchen_Qual <fct>, TotRms_AbvGrd <int>, Functional <fct>, ## # Fireplaces <int>, Fireplace_Qu <fct>, Garage_Type 
<fct>, ## # Garage_Finish <fct>, Garage_Cars <dbl>, Garage_Area <dbl>, ## # Garage_Qual <fct>, Garage_Cond <fct>, Paved_Drive <fct>, ## # Wood_Deck_SF <int>, Open_Porch_SF <int>, Enclosed_Porch <int>, ## # Three_season_porch <int>, Screen_Porch <int>, Pool_Area <int>, ## # Pool_QC <fct>, Fence <fct>, Misc_Feature <fct>, Misc_Val <int>, ## # Mo_Sold <int>, Year_Sold <int>, Sale_Type <fct>, Sale_Condition <fct>, ## # Sale_Price <int>, Longitude <dbl>, Latitude <dbl> ``` --- class: middle # What would this do? ```r simple_split %>% pluck("splits") ``` --- class: middle # What would this do? ```r simple_split %>% pluck("splits") ## [[1]] ## <2198/732/2930> ``` --- class: inverse # 🐈, 🌩 Which tidyverse function could you use to run `testing()` on a row in splits? Complete the code to extract the test set as a list. Do not use a for loop. ```r simple_split %>% pluck("splits") %>% ________________ ``` <div class="countdown" id="timer_5e46e30b" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">01</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r simple_split %>% pluck("splits") %>% map(testing) ## [[1]] ## # A tibble: 732 x 81 ## MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street Alley Lot_Shape ## <fct> <fct> <dbl> <int> <fct> <fct> <fct> ## 1 One_Story_… Resident… 141 31770 Pave No_A… Slightly… ## 2 One_Story_… Resident… 80 11622 Pave No_A… Regular ## 3 One_Story_… Resident… 93 11160 Pave No_A… Regular ## 4 Two_Story_… Resident… 75 10000 Pave No_A… Slightly… ## 5 Two_Story_… Resident… 47 53504 Pave No_A… Moderate… ## 6 One_Story_… Resident… 88 11394 Pave No_A… Regular ## 7 One_Story_… Resident… 65 8450 Pave No_A… Regular ## 8 Two_Story_… Resident… 21 1680 Pave No_A… Regular ## 9 Two_Story_… Resident… 0 7851 Pave No_A… Regular ## 10 Two_Story_… Resident… 58 16770 Pave No_A… Moderate… ## # … with 722 more rows, and 74 more variables: 
Land_Contour <fct>, ## # Utilities <fct>, Lot_Config <fct>, Land_Slope <fct>, Neighborhood <fct>, ## # Condition_1 <fct>, Condition_2 <fct>, Bldg_Type <fct>, House_Style <fct>, ## # Overall_Qual <fct>, Overall_Cond <fct>, Year_Built <int>, ## # Year_Remod_Add <int>, Roof_Style <fct>, Roof_Matl <fct>, ## # Exterior_1st <fct>, Exterior_2nd <fct>, Mas_Vnr_Type <fct>, ## # Mas_Vnr_Area <dbl>, Exter_Qual <fct>, Exter_Cond <fct>, Foundation <fct>, ## # Bsmt_Qual <fct>, Bsmt_Cond <fct>, Bsmt_Exposure <fct>, ## # BsmtFin_Type_1 <fct>, BsmtFin_SF_1 <dbl>, BsmtFin_Type_2 <fct>, ## # BsmtFin_SF_2 <dbl>, Bsmt_Unf_SF <dbl>, Total_Bsmt_SF <dbl>, Heating <fct>, ## # Heating_QC <fct>, Central_Air <fct>, Electrical <fct>, First_Flr_SF <int>, ## # Second_Flr_SF <int>, Low_Qual_Fin_SF <int>, Gr_Liv_Area <int>, ## # Bsmt_Full_Bath <dbl>, Bsmt_Half_Bath <dbl>, Full_Bath <int>, ## # Half_Bath <int>, Bedroom_AbvGr <int>, Kitchen_AbvGr <int>, ## # Kitchen_Qual <fct>, TotRms_AbvGrd <int>, Functional <fct>, ## # Fireplaces <int>, Fireplace_Qu <fct>, Garage_Type <fct>, ## # Garage_Finish <fct>, Garage_Cars <dbl>, Garage_Area <dbl>, ## # Garage_Qual <fct>, Garage_Cond <fct>, Paved_Drive <fct>, ## # Wood_Deck_SF <int>, Open_Porch_SF <int>, Enclosed_Porch <int>, ## # Three_season_porch <int>, Screen_Porch <int>, Pool_Area <int>, ## # Pool_QC <fct>, Fence <fct>, Misc_Feature <fct>, Misc_Val <int>, ## # Mo_Sold <int>, Year_Sold <int>, Sale_Type <fct>, Sale_Condition <fct>, ## # Sale_Price <int>, Longitude <dbl>, Latitude <dbl> ``` --- class: middle, center # `map()` Applies a function to every element of a list. Returns the results as a list. ```r map(.x, .f, …) ``` -- *"for every element of `.x` do `.f`"* --- class: middle .center[ # `map()` Applies a function to every element of a list. Returns the results as a list. ] ```r map( .x, # list or vector .f, # function to apply to each list element ... 
# other args to pass to function ) ``` --- class: middle, center <!-- --> .footnote[From [Adv R](https://adv-r.hadley.nz/functionals.html)] --- class: inverse # 🐈, 🌩 Complete the code! Use a `dplyr` function to add the training data as a list column to the `lm_split` table. ```r simple_split %>% __________________ ``` ``` ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 3 ## splits id train_set ## * <list> <chr> <list> ## 1 <split [2.2K/732]> train/test split <tibble [2,198 × 81]> ``` <div class="countdown" id="timer_5e46e385" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">01</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r simple_split %>% mutate(train_set = map(splits, training)) ## # # Monte Carlo cross-validation (0.75/0.25) with 1 resamples ## # A tibble: 1 x 3 ## splits id train_set ## * <list> <chr> <list> ## 1 <split [2.2K/732]> train/test split <tibble [2,198 × 81]> ``` --- class: middle, center | | List | Atomic | Same type | Nothing | |-----------------------|----------|-------------------|-------------|-----------| | One argument | `map()` | `map_lgl()`, ... | `modify()` | `walk()` | | Two arguments | `map2()` | `map2_lgl()`, ... | `modify2()` | `walk2()` | | One argument + index | `imap()` | `imap_lgl()`, ... | `imodify()` | `iwalk()` | | N arguments | `pmap()` | `pmap_lgl()`, ... 
| --- | `pwalk()` | .footnote[From [Adv R](https://adv-r.hadley.nz/functionals.html)] --- class: middle, center # `unnest()` Unnests one or more list columns ```r .Last.value %>% unnest(train_set) ``` --- ```r simple_split %>% mutate(train_set = map(splits, training)) %>% * unnest(train_set) ## # A tibble: 2,198 x 83 ## splits id MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street Alley ## <list> <chr> <fct> <fct> <dbl> <int> <fct> <fct> ## 1 <spli… trai… One_Story_… Resident… 81 14267 Pave No_A… ## 2 <spli… trai… Two_Story_… Resident… 74 13830 Pave No_A… ## 3 <spli… trai… Two_Story_… Resident… 78 9978 Pave No_A… ## 4 <spli… trai… One_Story_… Resident… 41 4920 Pave No_A… ## 5 <spli… trai… One_Story_… Resident… 43 5005 Pave No_A… ## 6 <spli… trai… One_Story_… Resident… 39 5389 Pave No_A… ## 7 <spli… trai… Two_Story_… Resident… 60 7500 Pave No_A… ## 8 <spli… trai… One_Story_… Resident… 0 7980 Pave No_A… ## 9 <spli… trai… Two_Story_… Resident… 63 8402 Pave No_A… ## 10 <spli… trai… One_Story_… Resident… 85 10176 Pave No_A… ## # … with 2,188 more rows, and 75 more variables: Lot_Shape <fct>, ## # Land_Contour <fct>, Utilities <fct>, Lot_Config <fct>, Land_Slope <fct>, ## # Neighborhood <fct>, Condition_1 <fct>, Condition_2 <fct>, Bldg_Type <fct>, ## # House_Style <fct>, Overall_Qual <fct>, Overall_Cond <fct>, ## # Year_Built <int>, Year_Remod_Add <int>, Roof_Style <fct>, Roof_Matl <fct>, ## # Exterior_1st <fct>, Exterior_2nd <fct>, Mas_Vnr_Type <fct>, ## # Mas_Vnr_Area <dbl>, Exter_Qual <fct>, Exter_Cond <fct>, Foundation <fct>, ## # Bsmt_Qual <fct>, Bsmt_Cond <fct>, Bsmt_Exposure <fct>, ## # BsmtFin_Type_1 <fct>, BsmtFin_SF_1 <dbl>, BsmtFin_Type_2 <fct>, ## # BsmtFin_SF_2 <dbl>, Bsmt_Unf_SF <dbl>, Total_Bsmt_SF <dbl>, Heating <fct>, ## # Heating_QC <fct>, Central_Air <fct>, Electrical <fct>, First_Flr_SF <int>, ## # Second_Flr_SF <int>, Low_Qual_Fin_SF <int>, Gr_Liv_Area <int>, ## # Bsmt_Full_Bath <dbl>, Bsmt_Half_Bath <dbl>, Full_Bath <int>, ## # Half_Bath <int>, 
Bedroom_AbvGr <int>, Kitchen_AbvGr <int>, ## # Kitchen_Qual <fct>, TotRms_AbvGrd <int>, Functional <fct>, ## # Fireplaces <int>, Fireplace_Qu <fct>, Garage_Type <fct>, ## # Garage_Finish <fct>, Garage_Cars <dbl>, Garage_Area <dbl>, ## # Garage_Qual <fct>, Garage_Cond <fct>, Paved_Drive <fct>, ## # Wood_Deck_SF <int>, Open_Porch_SF <int>, Enclosed_Porch <int>, ## # Three_season_porch <int>, Screen_Porch <int>, Pool_Area <int>, ## # Pool_QC <fct>, Fence <fct>, Misc_Feature <fct>, Misc_Val <int>, ## # Mo_Sold <int>, Year_Sold <int>, Sale_Type <fct>, Sale_Condition <fct>, ## # Sale_Price <int>, Longitude <dbl>, Latitude <dbl> ``` --- class: middle, center, inverse <!-- --> --- class: middle, center # Quiz How can we expand a list column to see what is in it? -- `tidyr::unnest()` .footnote[https://tidyr.tidyverse.org/reference/unnest.html] --- ```r lm_split %>% unnest(.metrics) ## # A tibble: 2 x 8 ## splits id .metric .estimator .estimate .notes .predictions .workflow ## <list> <chr> <chr> <chr> <dbl> <list> <list> <list> ## 1 <split [… train/… rmse standard 53885. <tibbl… <tibble [732… <workflo… ## 2 <split [… train/… rsq standard 0.568 <tibbl… <tibble [732… <workflo… ``` --- class: middle, center # `collect_metrics()` Unnests the metrics column from the results of a tidymodels `fit_split()` ```r split_results %>% collect_metrics() ``` --- class: your-turn # Your turn 6 Rewrite your code from the previous exercise using `fit_split()` and `collect_metrics()` to: 1. Split **ames** into training and test sets. Save the rsplit! 1. Fit a linear model to the training set, then use the model to predict new observations from the test set. 1. Extract the RMSE. Is it the same as the 53885 we calculated in the previous exercise? Keep `set.seed(100)` at the start of your code. 
<div class="countdown" id="timer_5e46e4f3" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">02</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- ```r set.seed(100) # Important! ames_split <- initial_split(ames) lm_split <- fit_split(Sale_Price ~ Gr_Liv_Area, model = lm_spec, split = ames_split) lm_split %>% collect_metrics() ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 53885. ## 2 rsq standard 0.568 ``` --- class: middle, center # `decision_tree()` Specifies a decision tree model ```r decision_tree(tree_depth = NULL, min_n = NULL, cost_complexity = NULL) ``` --- class: your-turn # Your turn 7 Write a pipe to create a model that uses the rpart package to fit a regression tree. Use `fit_split()` and `collect_metrics()` to compare the RMSE here to the RMSE of the linear model fit with the same formula. Which is better? *Hint: you'll need https://tidymodels.github.io/parsnip/articles/articles/Models.html* <div class="countdown" id="timer_5e46e49d" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- .pull-left[ ```r rt_spec <- decision_tree() %>% set_engine(engine = "rpart") %>% set_mode("regression") set.seed(100) # Important! fit_split(Sale_Price ~ Gr_Liv_Area, model = rt_spec, split = ames_split) %>% collect_metrics() ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 56055. ## 2 rsq standard 0.529 ``` ] .pull-right[ ```r set.seed(100) # Important! fit_split(Sale_Price ~ Gr_Liv_Area, model = lm_spec, split = ames_split) %>% collect_metrics() ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 53885. 
## 2 rsq standard 0.568 ``` ] --- class: middle, center # `nearest_neighbor()` Specifies a KNN model ```r nearest_neighbor(neighbors = 1) ``` --- class: your-turn # Your turn 8 Write *another* pipe to create a model that uses the kknn package to fit a K nearest neighbors model. Use `fit_split()` and `collect_metrics()` to compare the RMSE here to the RMSE of our other models fit with the same formula. Which is better? *Hint: you'll need https://tidymodels.github.io/parsnip/articles/articles/Models.html* <div class="countdown" id="timer_5e46e2c6" style="right:0;bottom:0;" data-warnwhen="0"> <code class="countdown-time"><span class="countdown-digits minutes">03</span><span class="countdown-digits colon">:</span><span class="countdown-digits seconds">00</span></code> </div> --- .pull-left[ ```r knn_spec <- nearest_neighbor() %>% set_engine(engine = "kknn") %>% set_mode("regression") set.seed(100) # Important! fit_split(Sale_Price ~ Gr_Liv_Area, model = knn_spec, split = ames_split) %>% collect_metrics() ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 59074. ## 2 rsq standard 0.485 ``` ] .pull-right[ `lm_spec` ``` ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 53885. ## 2 rsq standard 0.568 ``` `rt_spec` ``` ## # A tibble: 2 x 3 ## .metric .estimator .estimate ## <chr> <chr> <dbl> ## 1 rmse standard 56055. ## 2 rsq standard 0.529 ``` ] --- class: middle # .center[`fit_split()`] .center[.fade[Trains and tests a model with split data. Returns a tibble.]] ```r fit_split( formula, model, split, * metrics = NULL ) ``` If `metrics = NULL`, `fit_split()` computes `rmse` and `rsq` when the mode is `"regression"`. --- class: middle, center # `metric_set()` A helper function for selecting yardstick metric functions. 
```r metric_set(rmse, rsq) ``` --- class: middle, center <iframe src="https://tidymodels.github.io/yardstick/articles/metric-types.html#metrics" width="504" height="400px"></iframe> https://tidymodels.github.io/yardstick/articles/metric-types.html#metrics --- class: middle # .center[`fit_split()`] .center[.fade[Trains and tests a model with split data. Returns a tibble.]] ```r fit_split( formula, model, split, * metrics = metric_set(rmse) ) ``` --- class: middle, center, frame # How do we pick? -- **Which** .display[data] -- **Which** .display[criteria] -- **Which** .display[model]
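---
class: middle

# .center[Split, fit, predict, score: by hand]

The steps that `fit_split()` and `collect_metrics()` wrap up can be sketched in base R. This is a rough illustration, not the tidymodels implementation: the built-in `mtcars` data stands in for `ames`, `holdout_metrics()` is a hypothetical helper made up for this slide, and R squared is computed as the squared correlation between truth and prediction, which is our understanding of how yardstick's `rsq` is defined.

```r
# Sketch only: a base R stand-in for the split -> fit -> predict -> score steps.
# `mtcars` replaces `ames`; `holdout_metrics()` is a hypothetical helper.
holdout_metrics <- function(formula, data, prop = 0.75, seed = 100) {
  set.seed(seed)                                    # same seed, same split
  n_train   <- floor(prop * nrow(data))
  train_idx <- sample(nrow(data), size = n_train)   # rows used for training
  train_set <- data[train_idx, , drop = FALSE]
  test_set  <- data[-train_idx, , drop = FALSE]

  fit      <- lm(formula, data = train_set)         # train on training rows only
  estimate <- predict(fit, newdata = test_set)      # predict the held-out rows
  truth    <- test_set[[all.vars(formula)[1]]]      # outcome = left-hand side

  data.frame(
    .metric   = c("rmse", "rsq"),
    .estimate = c(
      sqrt(mean((truth - estimate)^2)),             # root mean squared error
      cor(truth, estimate)^2                        # squared correlation
    )
  )
}

metrics <- holdout_metrics(mpg ~ wt, data = mtcars)
metrics
```

Because the seed is set inside the helper, calling it twice with the same arguments returns the same metrics, which is why the exercises keep `set.seed(100)` at the start of the code.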